I frequently write about cool research that’s being done in literary studies (usually at the intersection of literary studies and something else), but I thought I’d take a minute and talk about stuff that, in my humble opinion, lit studies scholars should be digging into more.

Consider it a wish list for stuff I’d like to read, stuff I’d like to supervise PhD students in, stuff I’d like to collaborate on.

Historical and Cultural Analysis

I carried out my own postgraduate research largely in this area, which focuses on how digital technologies have changed (and continue to change) how we think, how we consume media, how we relate to one another, and how we produce literature. There is a wealth of this kind of exploration to be done, applying relatively traditional modes of historical and cultural analysis to texts while also engaging the digital as a cultural and historical matrix of forces (for example examining the influence of informational networks in shaping writing practices).

Many works from the advent of print to now can be understood differently by placing them in relation to the aesthetics and narrative structures of digitality and the internet; for example, how have changes in book formats changed the profession of writing? Of teaching writing? And what is likely to be the future of the digital-literary economy?

In particular, there are gaps in critical analysis of segments of the literary commercial economy relative to emergent technologies, including the cultural and historical functions of writers, publishers, printers, universities, bookshops, scholars, festivals, and educators, to name a few. Digital archives get a lot of scholarly focus, but there is less critical literary attention in areas like crowdsourced writing, literary games, literature-focused social networks, writing in games, virtual reality, and literary apps—all areas ripe for exploration.

Collaborative Resources, Especially Open Source

A growing area of research in the humanities and lit studies in particular involves the creation of literary resources, including resources like digital editions and archives of historical and literary texts, but also more experimental work involving imaging, web development, and hypermedia publication.

Projects like Amanda Visconti’s Infinite Ulysses (an interactive, collaborative annotated version of Joyce’s Ulysses) demonstrate how innovative electronic editions can make works more accessible within and beyond the university, while also revealing patterns of interaction and new modes of experience that can inform research. Hybrids of archives and databases are also an emerging and important area, where for example a particular author’s works might be presented along with archival evidence to create a new kind of searchable, interactive research document.

Researchers can also be mentored toward creating or simply using collaborative platforms, where scholars and researchers from around the world can work simultaneously on large and historically significant literary works (an example is the work being done, led by the National Centre for Scientific Research in Paris, digitally editing the Liber Glossarum).

Another area of interest for me personally has been the development of digital archival resources that integrate elements of social media, wikis and other means of sourcing user content, particularly through the development of user communities around the resource. There is real potential to create “social” digital archives, ones that allow users to collaborate, contribute, tag, share, edit and explore literary texts and networks of texts. Such an archive could take cues from, for example, the New York Public Library’s “NYPL Labs” initiative, which has harnessed user data to create numerous no-frills, highly usable resources for engaging with collections and connected materials.

This is relevant not just for study but also for members of the public, and the resource becomes richer and more valuable as more people contribute. The beauty of the user-driven archive is that, by being created as essentially an open-source repository, having a polished (read: expensive) interface is unnecessary. Value for users arrives in the form of user engagement, annotations, discussions, and a community of knowledge-sharing.

The same rules apply as do in open-source software communities, where users build an excellent resource simply for the joy of participating and adding to the resource. Key to this kind of research project would be the building of a community of users within and outside a literature department, and researchers carrying out this kind of study could partner with open source repositories, for example, whose methods of organizing content and engaging contributors could serve as an interesting model for humanities archiving.

While encouraging this kind of making-focused research is potentially intimidating within a traditional literature department, expertise and knowledge in emerging technologies and computing can be drawn from other parts of the university (or private sector) in meaningful collaborations. There’s also immense value in having resources like multimedia teaching labs, ideally with design and development tech support available, and running departmental workshops for researchers on methods in related areas.

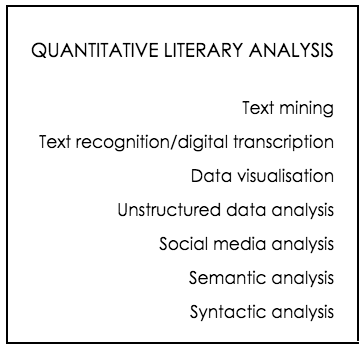

Quantitative Analysis and Computing

A number of new literary analytic methods have emerged in the last twenty years that adopt the tools of social science and computing to process the text as data. One of the best known of these is a study carried out by researchers from three universities in Poland, who used a computer program to analyse the full texts of a number of literary works, including Finnegans Wake, The Waves, the New Testament, and Tristram Shandy, for evidence of mathematical fractal properties. The authors found structures that could be described as mathematical fractals or even multifractals in texts including Finnegans Wake and The Waves, on one end of the spectrum, while books including the Bible and Tristram Shandy were found not to contain fractal structures.

A number of academics and developers are currently working to create tools that make this kind of quantitative, data-driven humanities research easier, for example by creating interfaces that non-specialists can use for text mining and visualisation, and programs for mining unstructured internet text, particularly social media.
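To give a flavour of what treating the text as data can look like at its most basic, here is a minimal Python sketch. It is my own illustration rather than the method of any study or tool mentioned here: the function names and the sample passage are made up, and the tokenising is deliberately crude.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and pull out runs of letters and apostrophes as words."""
    return re.findall(r"[a-z']+", text.lower())


def top_words(text: str, n: int = 5) -> list[tuple[str, int]]:
    """Return the n most frequent words with their counts."""
    return Counter(tokenize(text)).most_common(n)


def sentence_lengths(text: str) -> list[int]:
    """Return the number of words in each sentence, in reading order.

    Sentences are split naively on '.', '!' and '?', which is an
    assumption that will misfire on abbreviations and dialogue.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(tokenize(s)) for s in sentences]


# Invented sample passage, purely for illustration.
sample = "The sea was calm. The sea was grey and the sky was grey. Nobody spoke."

print(top_words(sample, 2))
print(sentence_lengths(sample))
```

Word counts and a sequence of sentence lengths are about as simple as text mining gets, but they are the kind of raw material that more sophisticated statistical analyses start from.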

The challenge in encouraging this kind of research is, again, one of integrating interdisciplinary methods, which generally requires two supervisors and/or access to the resources of two or more departments in order to create a robust study. However, researchers can be encouraged to carry out projects situated between computing and humanities departments in ways that allow them to develop relevant skill sets (basic coding, for example, or the use of social science analytic frameworks) that will outlast any “user-friendly” platforms that might arise and, more importantly, will contribute to a fuller mechanical understanding of the quantitative or character-based analytic process as it can be applied to the literary text.

Making new researchers aware of available tools is another challenge, as technology is constantly changing, which makes the idea of speculative student projects in emerging tech somewhat daunting. However, literature departments can counter this by, for example, hosting speaker series on topics in humanities data analysis and its links with emerging technologies. Joint workshops and modules can also be built between departments, or social science and computing methods training modules can be included as options on arts degrees, in order to stimulate new, boundary-crossing research approaches.

Has any of this sparked inspiration? Feel free to get in touch if you want to cook something up!